Apple’s Revolutionary On-Device Large Language Model: A Game-Changer for Privacy and Speed

I’m excited to share a development that could change the way we interact with our devices: Apple is on the cusp of introducing a large language model (LLM) that runs directly on its devices, promising better user privacy and faster responses.

The Future of AI: On-Device Processing

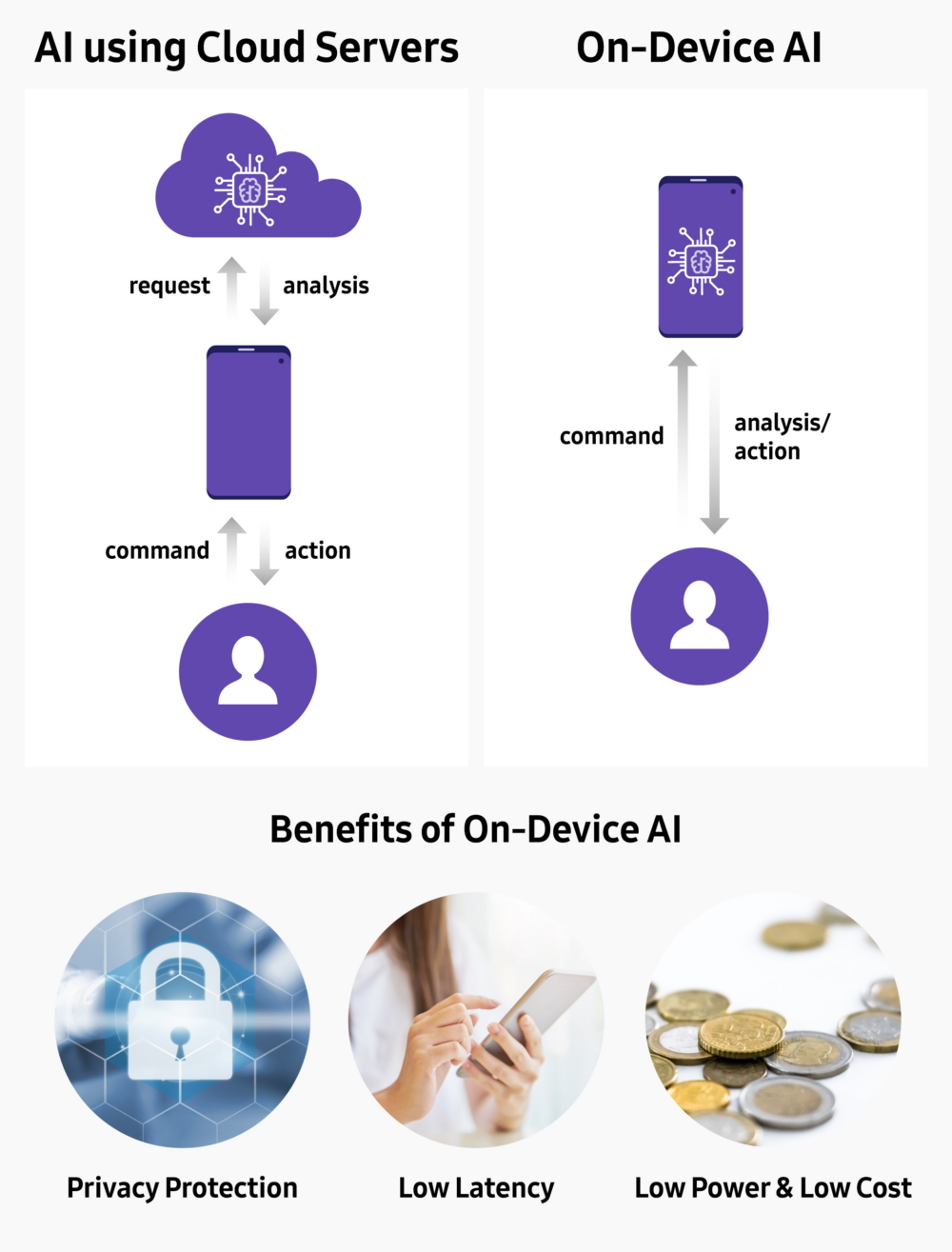

Unlike most current AI systems, which rely on cloud processing, Apple’s on-device LLM is designed to run entirely on the device. Because a phone or laptop has far less memory and compute than a data center, this approach could limit the model’s capabilities compared with cloud-based rivals. However, Apple might overcome these limitations by licensing technology from other AI leaders, such as Google.

The Benefits of On-Device Processing

The key benefits of processing data directly on the device are faster response times and greater privacy than cloud-based systems can offer, since requests need no round trip to a server and personal data stays on the device. This approach aligns with Apple’s focus on practical applications that integrate seamlessly into everyday life, rather than on raw technological power.

A Glimpse into Apple’s AI Ambitions

Recent reports describe discussions about integrating Google’s Gemini AI engine into Apple’s hardware, hinting at a possible collaboration that could blend on-device and cloud processing in iOS 18. Apple is expected to unveil the full scope of its AI ambitions at the Worldwide Developers Conference (WWDC) in June, where the company typically showcases major software updates.


Faster response times and enhanced privacy with on-device processing


The Road Ahead

As Apple shapes its AI marketing strategy around practical applications, we can expect AI to be integrated more seamlessly into our daily lives. I’m excited to see how Apple’s on-device LLM will change the way we interact with our devices.

AI integration into everyday life
