Accelerating AI Service Process with Huawei Data Lake Solutions

The AI era brings a range of challenges. One of the most significant is preparing high-quality data, which accounts for 60% of end-to-end model training time. The need for diverse data access and the sheer scale of raw data sets make this harder still.

Preparing high-quality data for AI model training

GPUs are the most critical component for training AI models, and improving GPU utilization is key to reducing costs. However, storage latency often leaves GPUs idle, and this idle time can account for up to 10% of total GPU execution time. The complexity of the training process, which can extend for several months in certain cases, compounds the problem.

The Importance of AI-Ready Data Infrastructure

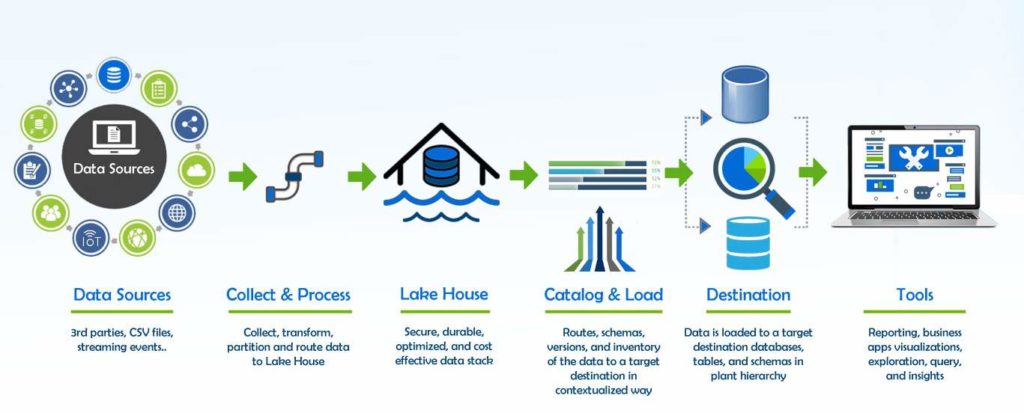

As we enter the AI/LLM era, we need an AI-ready data infrastructure that supports mixed Big Data and AI workloads. This is where Huawei’s AI Data Lake solution comes in, providing a unified data lake storage resource pool with both high-performance and high-capacity tiers. This allows mass data storage to expand flexibly and improves LLM training efficiency.

Huawei’s AI Data Lake solution

The solution also includes an intelligent data tiering feature, which ensures that hot, warm, and cold data are stored in appropriate storage tiers, optimizing total cost of ownership. Data fabric is used to efficiently collect mass data across vendors, regions, and systems, achieving on-demand and secure data mobility.
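
To make the idea of tiering concrete, here is a minimal sketch of an age-based placement rule. It is not Huawei’s implementation: the `DataObject` type, the `choose_tier` function, and the 7-day and 90-day thresholds are all hypothetical, chosen only to illustrate how hot, warm, and cold data might be separated by last access time.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical thresholds for illustration only; real tiering policies
# depend on the product and the workload.
HOT_WINDOW = timedelta(days=7)
WARM_WINDOW = timedelta(days=90)


@dataclass
class DataObject:
    """A stored object and the time it was last read or written."""
    name: str
    last_access: datetime


def choose_tier(obj: DataObject, now: datetime) -> str:
    """Return 'hot', 'warm', or 'cold' based on time since last access."""
    age = now - obj.last_access
    if age <= HOT_WINDOW:
        return "hot"    # candidate for the high-performance tier
    if age <= WARM_WINDOW:
        return "warm"
    return "cold"       # candidate for the high-capacity tier


if __name__ == "__main__":
    now = datetime(2024, 1, 1)
    samples = [
        DataObject("training-shard", now - timedelta(days=1)),
        DataObject("last-quarter-logs", now - timedelta(days=30)),
        DataObject("archive-2020", now - timedelta(days=365)),
    ]
    for obj in samples:
        print(obj.name, "->", choose_tier(obj, now))
```

A production policy would likely weigh more signals than recency, such as access frequency or object size, but the cost argument is the same: only data that is actively used needs to occupy the expensive tier.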

The AI Advantage

Huawei’s OceanStor A800 is at the heart of its AI Data Lake solution, combining high throughput with an architecture that separates the data plane from the control plane. This allows data to be transferred directly from interface modules to disks, bypassing bottlenecks created by CPUs and memory.

OceanStor A800, the heart of Huawei’s AI Data Lake solution

OceanFS, a high-performance parallel file system, enables OceanStor A800 to achieve a bandwidth of 500 GB/s per controller enclosure. This means that when training a trillion-parameter model, a 5 TB-level checkpoint read can be completed in just 10 seconds, and training can resume from checkpoints three times faster than with its nearest competitor.
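
The 10-second figure follows directly from dividing checkpoint size by bandwidth. The short sketch below reproduces that arithmetic, assuming decimal units (1 TB = 1000 GB) and a single controller enclosure sustaining its full 500 GB/s. The function name `transfer_seconds` is illustrative, and the result is an idealized lower bound that ignores protocol and file system overhead.

```python
def transfer_seconds(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Idealized transfer time: data size divided by sustained bandwidth."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / bandwidth_gb_per_s


if __name__ == "__main__":
    checkpoint_gb = 5 * 1000   # 5 TB checkpoint, decimal units (assumption)
    bandwidth_gb_per_s = 500   # per controller enclosure, as stated above
    print(transfer_seconds(checkpoint_gb, bandwidth_gb_per_s))  # prints 10.0
```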

Sustainability Matters

Finally, sustainability is an important factor for AI infrastructure, and Huawei’s AI Data Lake solution meets this requirement well. Its higher performance and capacity density shrink the storage footprint, helping customers sharply reduce rack space and the associated cooling requirements.

Sustainable AI infrastructure