Join Finley Chang as she delves into the groundbreaking collaboration between the University of Michigan and Apple, aimed at revolutionizing AI efficiency.

A New Dawn for AI Efficiency

As artificial intelligence (AI) becomes central to more and more technology, the efficiency of AI systems has become a pressing concern. The University of Michigan and Apple are collaborating on research into the efficiency of large language models (LLMs). The goal is to reduce the heavy computational demands of LLMs without compromising their effectiveness, a step towards more sustainable AI development.

A conceptual leap in AI technology

Strategic Distillation: The Core Innovation

At the heart of this collaboration is an approach known as “strategic distillation.” The technique splits the work an LLM does on a task into two phases: problem decomposition, in which the task is broken into smaller subproblems, and problem solving, in which those subproblems are answered. The team hypothesizes that distilling only the decomposition capability into smaller models can significantly reduce computational demands while maintaining performance. Early experiments support this hypothesis: the distilled models perform well across various tasks with substantially fewer computational resources.
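The two-phase split described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' implementation: the names `TwoStagePipeline`, `toy_decomposer`, and `toy_solver` are hypothetical, and the toy functions are stand-ins for a small distilled model and a larger solver model. The way the solver receives the subquestions is likewise an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical type aliases: a decomposer turns a question into subquestions,
# a solver turns the question plus its subquestions into an answer.
Decomposer = Callable[[str], List[str]]
Solver = Callable[[str, List[str]], str]


@dataclass
class TwoStagePipeline:
    """Runs decomposition and solving as two separate, swappable stages."""
    decompose: Decomposer  # assumed to be the small, distilled model
    solve: Solver          # assumed to be the larger solver model

    def answer(self, question: str) -> str:
        subquestions = self.decompose(question)
        return self.solve(question, subquestions)


def toy_decomposer(question: str) -> List[str]:
    # Stand-in for a distilled model: naively split the question on " and ".
    parts = question.rstrip("?").split(" and ")
    return [part.strip() + "?" for part in parts if part.strip()]


def toy_solver(question: str, subquestions: List[str]) -> str:
    # Stand-in for the solver: report what it was asked to work through.
    return f"solved {len(subquestions)} subquestions: " + " | ".join(subquestions)


pipeline = TwoStagePipeline(decompose=toy_decomposer, solve=toy_solver)
print(pipeline.answer("What is 2 + 3 and what is 4 * 5?"))
```

Keeping the two stages behind separate callables is what makes the idea testable: the decomposer can be swapped for a smaller model while the solver stays fixed, so any change in cost or accuracy can be attributed to the decomposition stage alone.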

Impressive Results and Future Implications

The distilled models generalize well, performing strongly across a wide range of tasks and datasets. They maintain performance comparable to that of their larger counterparts while requiring significantly less computational power. The result is particularly relevant to mathematical reasoning and question answering, where the distilled models sustain high performance while sharply reducing inference costs.

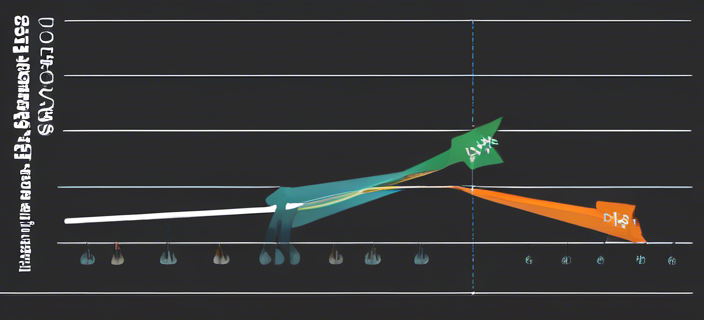

Performance comparison between traditional and distilled models

Charting the Future of Artificial Intelligence

This research sets the stage for more efficient and accessible LLM technology. By distilling the decomposition phase of LLMs into smaller models, the University of Michigan and Apple have made these tools more practical to apply across industries. The work is also likely to encourage further research into optimizing LLMs for diverse applications, which would support more sustainable adoption of the technology.

The insights from this project underscore the potential of targeted distillation as a strategy for improving model efficiency. They point to a future in which LLMs are used more broadly across fields and research domains.

In conclusion, the collaboration between the University of Michigan and Apple marks a significant milestone in the pursuit of AI efficiency. Its strategic distillation process has demonstrated the potential for reduced computational demands and opened new avenues for applying LLMs. How far this research reshapes the broader AI landscape remains an open and promising question.
